Cooling the latest AI chips with liquids

Introduction

In 1971, Intel revolutionized the world by launching the first commercial microprocessor, the ‘4004’. This chip boasted 2,300 transistors in a 16-pin DIL package and consumed less than half a watt from its 15V power rail. It heralded the start of the modern era of mass computing, although at $60 it wasn’t cheap—that’s equivalent to around $460 in today’s money.

At the time, the processor design engineers understood the relationship between speed and power consumption, but thermal design was not a concern. The IC remained barely warm even when running flat out at its maximum clock speed of 740kHz. Engineers were also unconcerned about the power rail’s 5% tolerance, and with the IC typically drawing only 30mA, voltage drop along tracks was negligible. This allowed the simple power source to be placed wherever it was most convenient.

Contrast this with the latest AI-positioned processors such as the Nvidia GB200 ‘Superchip’, which has more than 200 billion transistors, dissipates over 2.5 kW at peak, and runs from a sub-1 V power rail that must deliver thousands of amps. Keeping the die temperature within safe limits has now become a critical factor in key data center metrics: processing performance, server footprint, power usage effectiveness (PUE), cost effectiveness, and environmental impact. Additionally, power rails must be provided by high-efficiency DC/DC converters located directly at the processor to minimize voltage drops.
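The scale of the power delivery problem follows from I = P / V and V = I × R. The sketch below is only illustrative: the 0.8 V rail and the 100 µΩ path resistance are assumptions picked to show the order of magnitude, not published figures for any processor.

```python
# Supply current and resistive drop, 4004 versus a 2.5 kW processor.
# ASSUMPTIONS (not from the article or from Nvidia): a 0.8 V core rail and
# a 100 micro-ohm delivery path, chosen only to illustrate the scale.

def supply_current(power_w: float, rail_v: float) -> float:
    """Current drawn from the rail: I = P / V."""
    return power_w / rail_v


def path_drop(current_a: float, resistance_ohm: float) -> float:
    """Voltage lost in the delivery path: V = I * R."""
    return current_a * resistance_ohm


R_PATH_OHM = 100e-6   # hypothetical path resistance
AI_RAIL_V = 0.8       # hypothetical sub-1 V rail

i_4004 = 0.030        # 30 mA typical, from the text
i_ai = supply_current(2500.0, AI_RAIL_V)

drop_4004 = path_drop(i_4004, R_PATH_OHM)
drop_ai = path_drop(i_ai, R_PATH_OHM)

print(f"4004: {i_4004 * 1e3:.0f} mA, drop {drop_4004 * 1e6:.1f} uV")
print(f"AI processor: {i_ai:.0f} A, drop {drop_ai * 1e3:.1f} mV "
      f"({100 * drop_ai / AI_RAIL_V:.0f}% of the rail)")
```

Under these assumptions, a path that is invisible to the 4004 would lose more than a third of a sub-1 V rail, which is why the converters have to sit right at the processor.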

Up to a certain power level, heat generated by processors is typically managed using forced air. The die is thermally connected to a heat spreader plate on the top of the IC, which in turn is attached to a finned heatsink, either directly or through simple heat pipes connected to an adjacent heatsink. Airflow from system fans carries the heat away, typically exhausting it into the local environment. Building air conditioning then removes this heat from the broader environment, though at a considerable energy cost. The energy used for cooling is reflected in the PUE metric, which is the total facility energy divided by the energy used by the IT equipment, with a value of 1.5 being common. As many data centers now consume over 100 MW (1), potentially a third of this energy is used for cooling, which represents a massive cost and has a significant environmental impact.
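The "one third" figure follows directly from the PUE definition. A minimal sketch of the arithmetic, using the 100 MW and PUE 1.5 values from the text (the function name is illustrative):

```python
# PUE = total facility energy / IT equipment energy.
# The non-IT share of the total is therefore (PUE - 1) / PUE.

def overhead_fraction(pue: float) -> float:
    """Fraction of total facility energy not consumed by IT equipment."""
    return (pue - 1.0) / pue


TOTAL_MW = 100.0   # facility consumption, from the text
PUE = 1.5          # common value, from the text

it_mw = TOTAL_MW / PUE
overhead_mw = TOTAL_MW - it_mw

print(f"IT load: {it_mw:.1f} MW")
print(f"Overhead: {overhead_mw:.1f} MW "
      f"({100 * overhead_fraction(PUE):.1f}% of total)")
```

The overhead also covers power conversion losses, lighting, and other non-IT loads, so one third is an upper bound on the cooling share rather than an exact figure.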

Liquid cooling is the only practical solution at high power

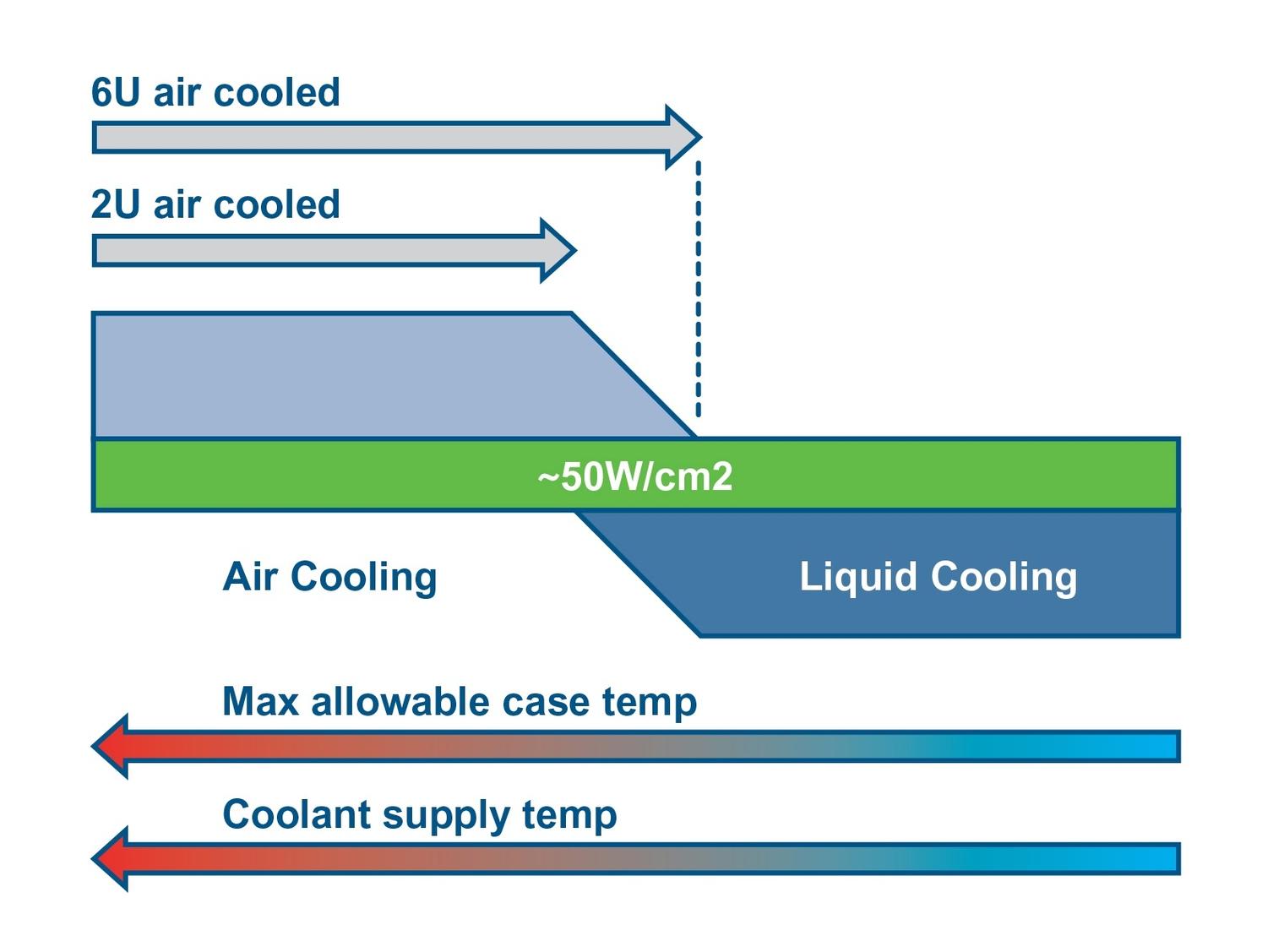

Transferring heat through the movement of a given volume of liquid is far more efficient than through the same volume of air – by a factor of about 3,600 for water. This makes liquid cooling through the die heat spreader a highly effective method. It is generally necessary when heat dissipation exceeds around 50 W per cm2 of die area. Given that the GB200 has an estimated area of about 9cm2, any dissipation over 450 W indicates the need for pumped liquid cooling.

Picture 1: Liquid cooling of chips is needed above approximately 50 W / cm2 dissipation.
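Both numbers in this section can be checked with a few lines of arithmetic. The sketch below compares the volumetric heat capacity of water and air, then applies the 50 W/cm² threshold to the 9 cm² area estimate from the text. The fluid properties are approximate room-temperature textbook values and are assumptions here; with them the ratio comes out at roughly 3,500, in line with the factor quoted above.

```python
# Volumetric heat capacity (J per m^3 per K) = density * specific heat.
# Property values are approximate figures near 20 degrees C (assumed).
WATER = {"density_kg_m3": 998.0, "cp_j_kg_k": 4182.0}
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat carried per cubic metre of fluid per kelvin of temperature rise."""
    return fluid["density_kg_m3"] * fluid["cp_j_kg_k"]


def heat_flux(power_w: float, area_cm2: float) -> float:
    """Average heat flux over the die in W per cm^2."""
    return power_w / area_cm2


ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)

THRESHOLD_W_PER_CM2 = 50.0   # from the text
DIE_AREA_CM2 = 9.0           # estimate from the text

threshold_power = THRESHOLD_W_PER_CM2 * DIE_AREA_CM2
peak_flux = heat_flux(2500.0, DIE_AREA_CM2)

print(f"Water carries about {ratio:.0f}x more heat per unit volume than air")
print(f"Liquid cooling indicated above {threshold_power:.0f} W")
print(f"Average flux at 2.5 kW: {peak_flux:.0f} W/cm^2")
```

At the 2.5 kW peak mentioned earlier, the average flux over the estimated area is several times the threshold.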

In ‘Direct-to-Chip’ cooling, the liquid is routed through channels in a cold plate attached to the chip’s heat spreader via a thermal interface. When the liquid does not evaporate during the process, this is referred to as ‘single-phase’ operation: the medium, typically water, is pumped through a heat exchanger cooled by fans. JetCool, a Flex company, offers direct-to-chip liquid cooling modules that use arrays of small fluid jets to precisely target hot spots on processors, improving cooling performance for high-power electronics at the chip or device level. More information can be found at https://jetcool.com/
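For single-phase operation, the required coolant flow can be estimated from the steady-state energy balance P = ṁ × c_p × ΔT. The sketch below assumes a 10 K coolant temperature rise across the cold plate and that all of the heat goes into the water; both are simplifying assumptions, not figures from any product.

```python
# Single-phase water loop: flow needed to carry away a given heat load.
# ASSUMPTIONS: approximate water properties near 20 degrees C, a 10 K
# coolant temperature rise, and no heat lost by any other path.
WATER_CP_J_KG_K = 4182.0
WATER_DENSITY_KG_M3 = 998.0


def mass_flow_kg_s(power_w: float, delta_t_k: float) -> float:
    """Mass flow from the energy balance P = m_dot * cp * delta_T."""
    return power_w / (WATER_CP_J_KG_K * delta_t_k)


def litres_per_minute(mass_flow: float) -> float:
    """Convert a water mass flow in kg/s to a volume flow in L/min."""
    return mass_flow / WATER_DENSITY_KG_M3 * 1000.0 * 60.0


flow_kg_s = mass_flow_kg_s(2500.0, 10.0)
flow_l_min = litres_per_minute(flow_kg_s)

print(f"Mass flow: {flow_kg_s * 1000:.1f} g/s")
print(f"Volume flow: {flow_l_min:.2f} L/min")
```

Halving the allowed temperature rise doubles the required flow, so the pump and channel design trade off directly against coolant outlet temperature.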

Instead of being rejected to air by fans, the heat can be transferred to a second liquid loop, which can provide hot water to the building and potentially to local consumers. Two-phase operation offers better heat transfer by allowing the liquid, typically a fluorocarbon, to evaporate as it absorbs heat and then re-condense at the heat exchanger. This method can provide a dramatic improvement in performance.

However, system fans are still needed to cool other components, although some, like the DC/DC converters, can be integrated into the liquid cooling loop using their own baseplates. This aligns with the ‘vertical power delivery’ concept, where DC/DC converters are positioned directly below the processor to minimize voltage drops.

A practical limitation of the direct-to-chip approach is the thermal resistance of the interface between the chip and the cold plate. Precisely flat surfaces and high-performance thermal paste are necessary, but at the multi-kilowatt level the temperature differential can still be problematic.
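The interface problem can be seen from the relation ΔT = P × R_th: the temperature difference across the interface grows linearly with power. The resistance values in this sketch are hypothetical, chosen only to show the trend, and do not describe any particular paste or cold plate.

```python
# Temperature difference across the chip-to-cold-plate interface.
# ASSUMPTION: the interface thermal resistances below are hypothetical
# illustration values in K/W, not data for any real material.

def interface_delta_t(power_w: float, r_th_k_per_w: float) -> float:
    """Temperature drop across the interface: delta_T = P * R_th."""
    return power_w * r_th_k_per_w


HYPOTHETICAL_R_TH = [0.01, 0.02]   # K/W
POWER_LEVELS_W = [450.0, 2500.0]   # threshold and peak figures from the text

for r_th in HYPOTHETICAL_R_TH:
    for power in POWER_LEVELS_W:
        dt = interface_delta_t(power, r_th)
        print(f"R_th = {r_th:.2f} K/W, P = {power:.0f} W -> delta_T = {dt:.1f} K")
```

An interface that costs a few kelvin at a few hundred watts costs tens of kelvin at multi-kilowatt levels, which eats directly into the margin between coolant temperature and the maximum die temperature.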

The cold plate interface seems set to become a limit on heat dissipation and, consequently, on performance. As a solution, immersion cooling can be considered. Here, the whole server is placed in an open bath of dielectric fluid, which is pumped via a reservoir around a loop to a heat exchanger. Again, two-phase operation is possible for the best performance.

Those Intel engineers in 1971 would have been astonished by the performance levels achieved in data centers in 2024. But is there a cliff edge coming? There are practical limits to chip feature size and temperature increase, as well as constraints on energy supply and environmental impact, especially if performance continues to rely on simply replicating hardware.

Ultimately, investors seek a return on their investment. Given the extreme complexity of cooling, the high energy costs, and the expense of acquiring the chips (the GB200 reportedly costs up to $70,000 each), commercial viability could soon become a pressing issue. Maybe AI will tell us what the solution is.

References:

(1) https://www.sunbirddcim.com/in...