Meeting the power demands of always-on data centers

As you’re no doubt aware, ‘the cloud’ describes the collective code being run on computers in data centers and server farms. Whether it’s consumers storing their photos in iCloud or Google Drive, or businesses running their hosted systems, someone has to manage the data center that’s providing the service. But what does today’s boom in cloud computing mean for the power supply and conversion sub-systems in all those data centers?

Power demands on the up

According to Gartner, cloud computing has become the ‘new normal’, with the COVID-19 pandemic giving it a further boost. End-user spending on public cloud services is expected to total $304 billion in 2021[1]. IDC quotes similar figures, with a 24 % annual growth rate. The data centers providing these services typically run 24/7, and users expect them to be available at any time of day.

At the same time, security threats continue to grow, which means there’s an increased need for proactive, ‘always-on’ protection, as well as more demand for backups that are available at short notice. Anti-virus and threat-protection software, along with encryption and decryption, all add to the load on servers.

For the big providers such as Amazon Web Services and Microsoft, minimizing downtime is a key competitive advantage. In practice, this often means running two data centers in an ‘active-active’ configuration, with the backup systems ready to go immediately if there’s any problem at the main site, or even moving to a three-data-center topology[2].

The performance of the servers and storage in the data centers is increasing, and there’s a demand for more compact systems that reduce the floor space needed, and hence cost. This trend towards smaller, denser IT means power systems must also be as compact as possible – while maximizing efficiency to keep power and cooling costs down.

In fact, the overall power consumption of data centers has stayed broadly constant over the past few years, as efficiency improvements have balanced out the performance increases. For example, virtualization means that CPU, memory and storage are used much more effectively, so less power is wasted on under-used equipment. While some estimates suggest that data centers use as much as 12 % of the UK’s electricity[3], the global figure is usually quoted as between 1 and 2 %, which still represents a very large volume of CO2 emissions.

Figure 1: Modern data center racks

Power system architectures

We’ve established that power systems need to deliver more power from less physical space, while also becoming more efficient.

Power system vendors have taken various approaches to meet these demands. Traditional data center power architectures provided an individual AC supply to each blade in a rack, where it was converted locally for each load. An emerging alternative is to handle AC-DC conversion in bulk, perhaps with just one converter system per rack, and then distribute the resulting DC power to each blade.

With the growth in total rack power requirements, there’s also a shift from 12 V DC to 48 V DC distribution. Raising the voltage fourfold cuts the current needed to deliver the same power to a quarter. Because resistive (I²R) losses scale with the square of the current, the losses in the same conductor fall by a factor of 16, which avoids the need for large, expensive power cables.
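
The factor of 16 follows directly from P = V·I and P_loss = I²·R. The sketch below checks the arithmetic for a hypothetical 3 kW rack feed through a conductor with an assumed resistance of 2 mΩ; both numbers are illustrative, not taken from any real installation.

```python
def distribution_loss(power_w: float, bus_voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 R) loss in a conductor carrying power_w at bus_voltage_v."""
    current_a = power_w / bus_voltage_v  # I = P / V
    return current_a ** 2 * resistance_ohm  # P_loss = I^2 * R


# Hypothetical values for illustration only: same power, same conductor, two bus voltages.
RACK_POWER_W = 3000.0
BUS_RESISTANCE_OHM = 0.002

loss_12v = distribution_loss(RACK_POWER_W, 12.0, BUS_RESISTANCE_OHM)
loss_48v = distribution_loss(RACK_POWER_W, 48.0, BUS_RESISTANCE_OHM)

print(f"12 V bus: {RACK_POWER_W / 12.0:.0f} A, loss {loss_12v:.1f} W")
print(f"48 V bus: {RACK_POWER_W / 48.0:.1f} A, loss {loss_48v:.1f} W")
print(f"loss ratio: {loss_12v / loss_48v:.0f}x")
```

Whatever resistance is assumed, the ratio stays at 16, because it depends only on the square of the 4:1 voltage ratio.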

The 48 V DC supply to a board is often converted first to 12 V by an Intermediate Bus Converter (IBC), and then by a local Point of Load (PoL) converter to the low voltage required by particular components. This two-stage architecture is highly efficient and can deliver high currents where required or, conversely, low currents in a cost-effective design.
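
When converters are cascaded, their losses compound: the overall efficiency of the chain is the product of the individual stage efficiencies. The sketch below illustrates this with assumed stage efficiencies of 97 % for the IBC and 93 % for the PoL stage and a hypothetical 200 W load; these are illustrative figures, not datasheet values.

```python
from math import prod


def cascaded_efficiency(stage_efficiencies: list[float]) -> float:
    """Overall efficiency of converters connected in series: the product of stage efficiencies."""
    return prod(stage_efficiencies)


def input_power(output_power_w: float, efficiency: float) -> float:
    """Power drawn from the source to deliver output_power_w at the given efficiency."""
    return output_power_w / efficiency


# Hypothetical stage efficiencies, for illustration only.
IBC_EFFICIENCY = 0.97  # 48 V -> 12 V intermediate bus converter
POL_EFFICIENCY = 0.93  # 12 V -> low-voltage point-of-load converter

overall = cascaded_efficiency([IBC_EFFICIENCY, POL_EFFICIENCY])
load_w = 200.0
print(f"overall efficiency: {overall:.1%}")
print(f"input power for a {load_w:.0f} W load: {input_power(load_w, overall):.1f} W")
```

In practice each stage's efficiency varies with load, input voltage and temperature, so a real estimate would take values from the datasheet curves at the intended operating point.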

Another approach, called direct conversion, uses a single stage to convert the 48 V supply directly to the low voltage required by a load, which may well be 2 V or less. Keeping the supply at 48 V right up to the load can help where high current is required, for example for high-end processors, ASICs and FPGAs. Whether two-stage or direct, today’s DC-DC converters typically include sophisticated digital control and monitoring, for example via the industry-standard PMBus interface.
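
As an example of what this digital interface carries, many PMBus devices report telemetry such as output current or temperature in the LINEAR11 format defined by the PMBus specification: a 16-bit word whose upper 5 bits are a two's-complement exponent N and whose lower 11 bits are a two's-complement mantissa Y, giving the value Y × 2^N. The decoder below is a minimal sketch of that format; the raw word is hypothetical, and which commands a particular module reports in LINEAR11 should be checked against its datasheet.

```python
def decode_linear11(raw: int) -> float:
    """Decode a 16-bit PMBus LINEAR11 word into a real value.

    Bits 15..11 hold a 5-bit two's-complement exponent N;
    bits 10..0 hold an 11-bit two's-complement mantissa Y.
    The encoded value is Y * 2**N.
    """
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("LINEAR11 word must fit in 16 bits")
    exponent = raw >> 11      # upper 5 bits
    mantissa = raw & 0x7FF    # lower 11 bits
    # Sign-extend both two's-complement fields.
    if exponent & 0x10:
        exponent -= 0x20
    if mantissa & 0x400:
        mantissa -= 0x800
    return mantissa * 2.0 ** exponent


# Hypothetical raw word: exponent -2 (0b11110), mantissa 100, i.e. 100 * 2**-2.
raw_word = (0b11110 << 11) | 100
print(decode_linear11(raw_word))  # prints 25.0
```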

New power devices in practice

Let’s look at an example device: the BMR492 from Flex Power Modules. This high-power DC/DC converter, designed for data center applications, delivers a 12 V output from a nominal 48 V input. It can supply a peak output of up to 1100 W for periods of up to one second, which makes it well suited to the ‘burst mode’ power demands of modern CPUs, and it achieves efficiency of up to 97.4 %.

While these figures are impressive, the key step forward with the BMR492 is that it delivers them in a compact package. It uses the industry-standard ‘eighth-brick’ format (just 58.4 × 22.7 × 13.2 mm), yet provides power output and performance previously only possible with larger quarter-brick devices.
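
One way to put a number on this compactness is volumetric power density. The sketch below divides the 1100 W peak figure quoted above by the eighth-brick volume. Because the peak rating applies only for short bursts, this overstates the continuous power density; the result is an illustration, not a datasheet figure.

```python
def volumetric_power_density(power_w: float, length_mm: float, width_mm: float, height_mm: float) -> float:
    """Power per unit volume in W/cm^3 for a rectangular package given in mm."""
    volume_cm3 = (length_mm * width_mm * height_mm) / 1000.0  # 1 cm^3 = 1000 mm^3
    return power_w / volume_cm3


# Eighth-brick dimensions and the 1100 W *peak* output quoted in the text.
density = volumetric_power_density(1100.0, 58.4, 22.7, 13.2)
print(f"{density:.1f} W/cm^3 (based on peak output)")
```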

Figure 2: BMR474

One approach for the downstream PoL stage is to use a compact, vertically mounted SIP (single inline package) format, which minimizes the board space occupied. For example, the BMR474 from Flex Power Modules is a digital PoL DC/DC converter that provides an output of 0.6 V to 3.3 V from an input in the range 6 V to 15 V. Efficiency is 95.1 % at full load, with a 12 V input and a 3.3 V output.

Thanks to its vertical SIP design, the BMR474 has a footprint of only 2.84 cm², yet it can still deliver up to 80 A of output current to meet the needs of typical data center loads.
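
A simple power balance shows why a step-down PoL converter placed close to its load helps with high currents: for the same power, the 12 V input side carries only a fraction of the output current. The sketch below assumes a hypothetical operating point (12 V in, 1.0 V out, 60 A load, 90 % efficiency); these are illustrative values, not BMR474 datasheet figures.

```python
def step_down_input_current(v_in: float, v_out: float, i_out: float, efficiency: float) -> float:
    """Input current of a DC/DC converter from the power balance V_in * I_in = V_out * I_out / efficiency."""
    output_power_w = v_out * i_out
    return output_power_w / (efficiency * v_in)


# Hypothetical operating point, for illustration only.
i_in = step_down_input_current(v_in=12.0, v_out=1.0, i_out=60.0, efficiency=0.90)
print(f"input current: {i_in:.2f} A for a 60 A output")
```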

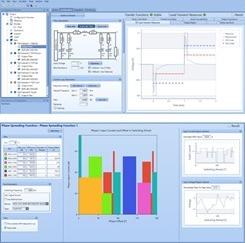

Figure 3: Example screenshots from Flex Power Designer

Beyond their electrical specifications, the BMR492 and BMR474 are designed for strong thermal performance, with options for conduction or convection cooling and robust over-temperature protection. Design engineers can optimize both electrical and thermal performance by using Flex Power Designer (FPD), a free software tool.
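
Thermal design starts from the power a converter dissipates as heat, which is the difference between input and output power: P_out × (1/η − 1). The sketch below compares two hypothetical efficiencies at an assumed 800 W output to show how a few percentage points of efficiency change the heat that the cooling system must remove. Real efficiency varies with load, input voltage and temperature, so a design would use the datasheet curves rather than these illustrative values.

```python
def converter_loss_w(output_power_w: float, efficiency: float) -> float:
    """Heat dissipated in a converter: P_in - P_out = P_out * (1/efficiency - 1)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return output_power_w * (1.0 / efficiency - 1.0)


# Hypothetical operating points, for illustration only.
for efficiency in (0.95, 0.97):
    loss = converter_loss_w(800.0, efficiency)
    print(f"{efficiency:.0%} efficient at 800 W out: {loss:.1f} W of heat")
```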

Conclusion

Data center performance is climbing, yet systems are becoming more compact, while demands for 24/7 uptime and growing security threats create challenges of their own. All the while, there is downward pressure on electricity consumption.

For power system design engineers, this may at first appear an insurmountable challenge. But new architectures and components are helping to resolve these design challenges, ensuring that our ever-growing demand for cloud services can be met.